Lineage Graph

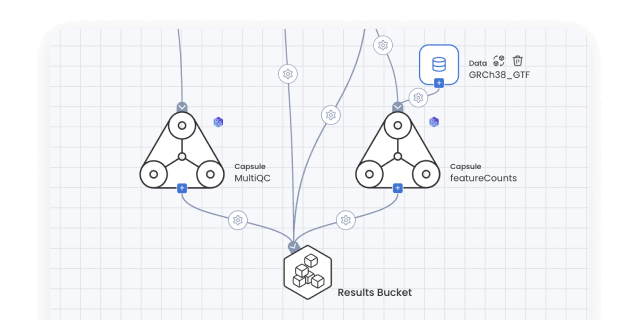

An immutable record of how Result Data are generated within Code Ocean, showing source data, processing steps through Capsules and Pipelines, and Result Data output.

Key capabilities

Create an immutable record of every result

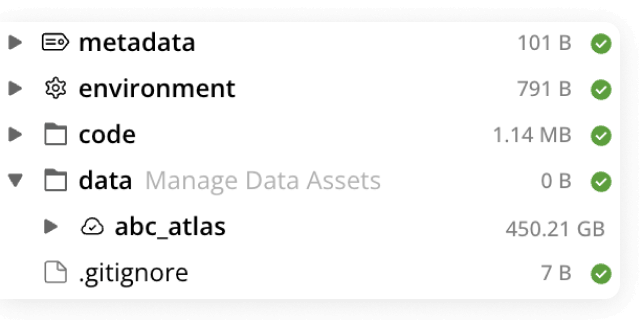

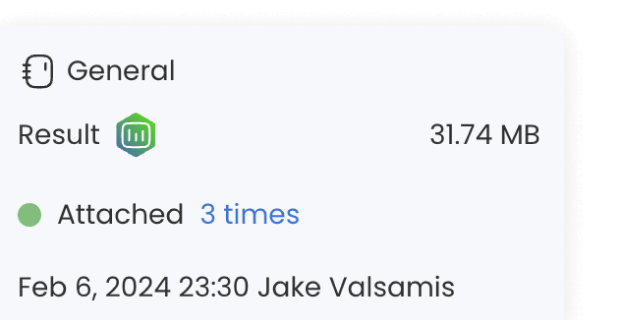

All computational work is tracked in Git, creating an immutable record of all work done and the results produced. Click into any Result to access and view key details at a glance.

See and explore full result provenance

Gain access to the entire story of how your Result was produced. Enter any node to get to the exact version and Git commit of the Capsule or Pipeline that generated each result.

Assess reproducibility for all Result Data

The Lineage Graph assesses the reproducibility of any given Result. Reproducibility can be guaranteed when Capsules and Pipelines are versioned, and when Data are kept internally on the platform.
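As a rough illustration of the rule above, the Python sketch below models a lineage as a list of nodes. It treats a Result as reproducible only when every Capsule and Pipeline is versioned and every Data asset is kept on the platform. The `Node` type, its fields, and both helper functions are hypothetical and are not Code Ocean's data model or API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Node:
    """Hypothetical lineage node; not Code Ocean's actual schema."""
    name: str
    kind: str                      # "capsule", "pipeline", or "data"
    version: Optional[str] = None  # set when a Capsule or Pipeline is versioned
    internal: bool = True          # for data: kept internally on the platform?


def blocking_nodes(nodes):
    """Return the nodes that prevent a reproducibility guarantee."""
    blockers = []
    for node in nodes:
        if node.kind in ("capsule", "pipeline") and node.version is None:
            blockers.append(node)  # unversioned code
        elif node.kind == "data" and not node.internal:
            blockers.append(node)  # external data that may change
    return blockers


def is_reproducible(nodes):
    """True only when no node blocks the guarantee."""
    return not blocking_nodes(nodes)


lineage = [
    Node("raw-reads", "data", internal=True),
    Node("align", "capsule", version="2.0"),
    Node("public-reference", "data", internal=False),
]
print(is_reproducible(lineage))
print([n.name for n in blocking_nodes(lineage)])
```

In this toy example the external `public-reference` dataset is the only node that blocks the guarantee. Importing it and making it immutable would change the outcome.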

How the Lineage Graph works with the rest of the Code Ocean platform

Frequently asked questions

Can you always guarantee reproducibility?

Yes, if all source data has been imported and made immutable. If you’ve used an external data source that is subject to change, reproducibility cannot be guaranteed.

Can you click into each node to see details?

Yes. You can interact with every node on the Lineage Graph. Click on any asset that you have access to and explore the full details of which version was run, when it was run, and by whom.

Can we use this to connect to an external lineage tool?

Yes. Using our API, you could connect to your own instance of an open-source lineage tool, such as OpenLineage.
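To make that concrete, the Python sketch below turns one lineage record into a dictionary shaped like an OpenLineage run event. The `record` layout, the `code-ocean-example` namespace, the producer URL, and the `to_openlineage_event` helper are all assumptions made for illustration; none of them is part of the Code Ocean API. The `schemaURL` value should be checked against the OpenLineage spec version your backend expects.

```python
import json
import uuid
from datetime import datetime, timezone

# Hypothetical lineage record, e.g. assembled from API responses.
record = {
    "capsule": "align-reads",
    "version": "2.0",
    "inputs": ["raw-reads"],
    "outputs": ["aligned-bam"],
}

NAMESPACE = "code-ocean-example"  # placeholder namespace, an assumption


def to_openlineage_event(record, namespace=NAMESPACE):
    """Map the hypothetical record onto OpenLineage run-event fields."""
    return {
        "eventType": "COMPLETE",
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "producer": "https://example.com/lineage-exporter",  # placeholder
        # Adjust to the spec version used by your OpenLineage backend.
        "schemaURL": "https://openlineage.io/spec/2-0-2/OpenLineage.json#/$defs/RunEvent",
        "run": {"runId": str(uuid.uuid4())},
        "job": {
            "namespace": namespace,
            "name": f"{record['capsule']}@{record['version']}",
        },
        "inputs": [{"namespace": namespace, "name": n} for n in record["inputs"]],
        "outputs": [{"namespace": namespace, "name": n} for n in record["outputs"]],
    }


event = to_openlineage_event(record)
print(json.dumps(event, indent=2))
```

The resulting JSON could then be sent to whichever OpenLineage-compatible backend you run. The transport step is left out here because it depends on that deployment.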

Built for Computational Science

Data analysis

Use ready-made template Compute Capsules to analyze your data, develop your data analysis workflow in your preferred language and IDE using any open-source software, and take advantage of built-in containerization to guarantee reproducibility.

Data management

Manage your organization's data and control who has access to it. Built specifically to meet all FAIR principles, data management in Code Ocean uses custom metadata and controlled vocabularies to ensure consistency and improve searchability.

Bioinformatics pipelines

Build, configure, and monitor bioinformatics pipelines from scratch using a visual builder for easy setup. Or, import from nf-core in one click for instant access to a curated set of best-practice analysis pipelines. Pipelines run on AWS Batch out of the box, so they scale automatically with no setup needed.

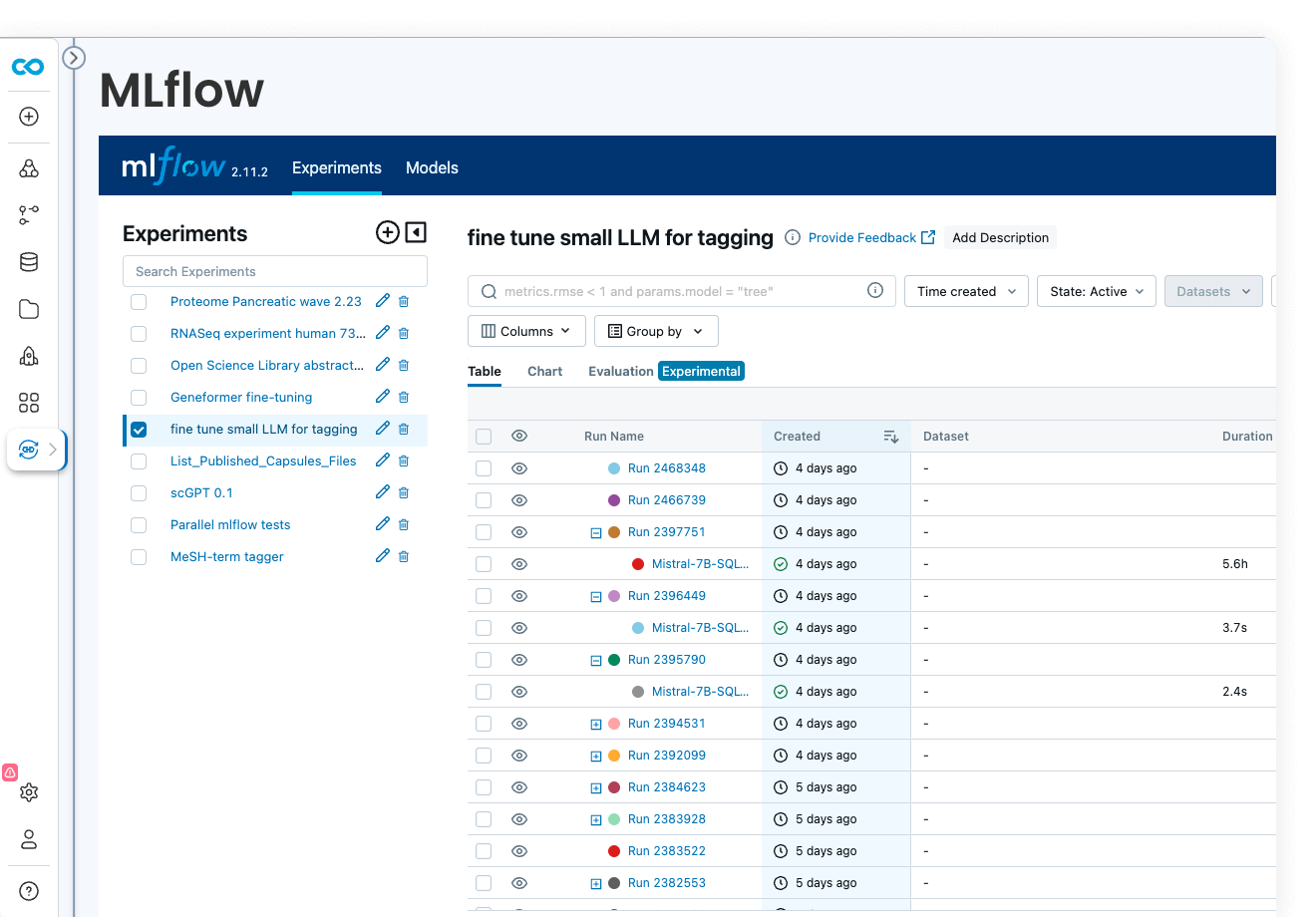

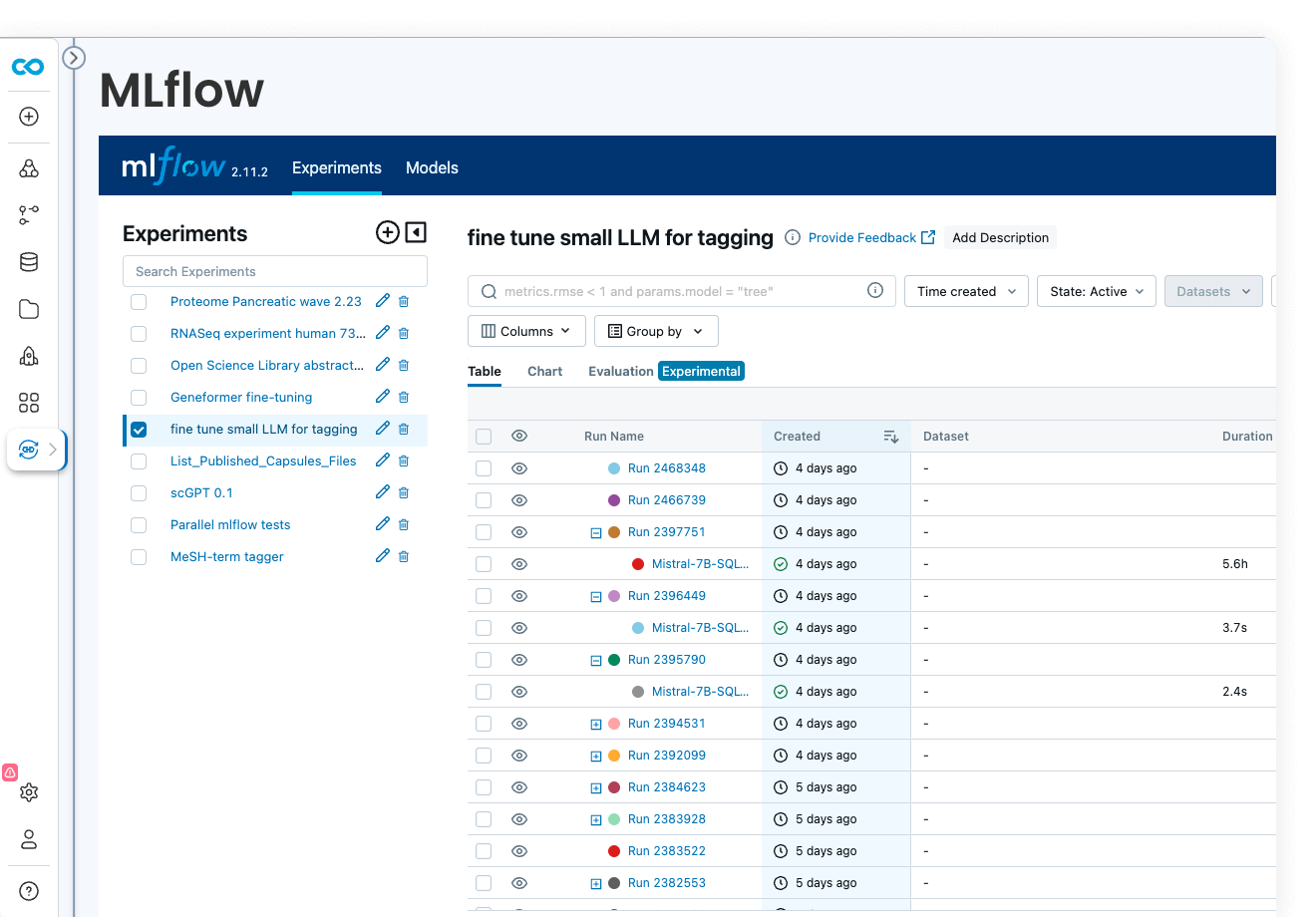

ML model development

Code Ocean is uniquely suited for Artificial Intelligence, Machine Learning, Deep Learning, and Generative AI. Install GPU-ready environments and provision GPU resources in a few clicks. Integration with MLflow allows you to develop models, track parameters, and manage models from development to production, while enjoying out-of-the-box reproducibility and lineage.

Multiomics

Analyze and work with large multimodal datasets efficiently using scalable compute and storage resources, cached packages for R and Python, preloaded multiomics analysis software that works out of the box, and full lineage and reproducibility.

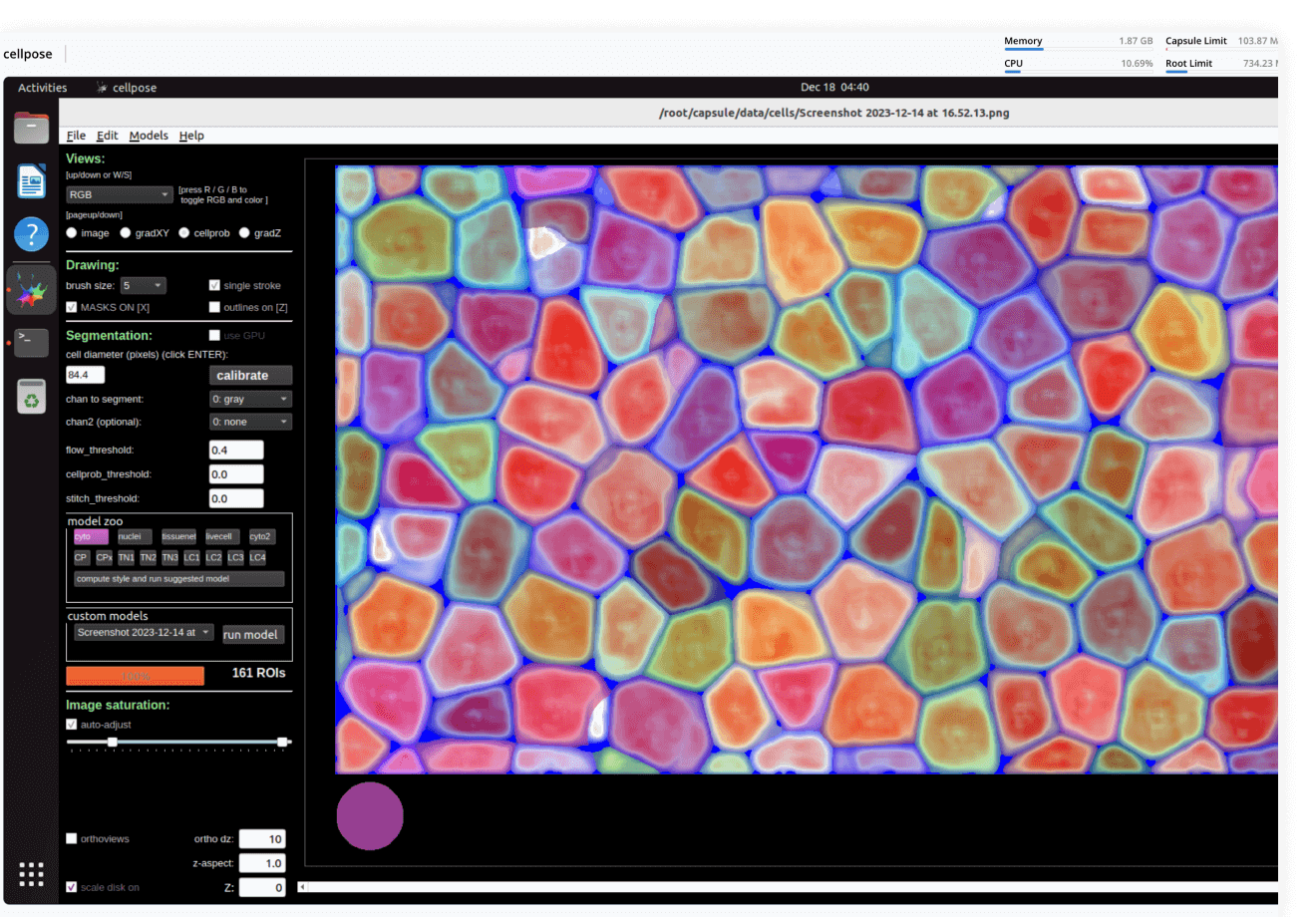

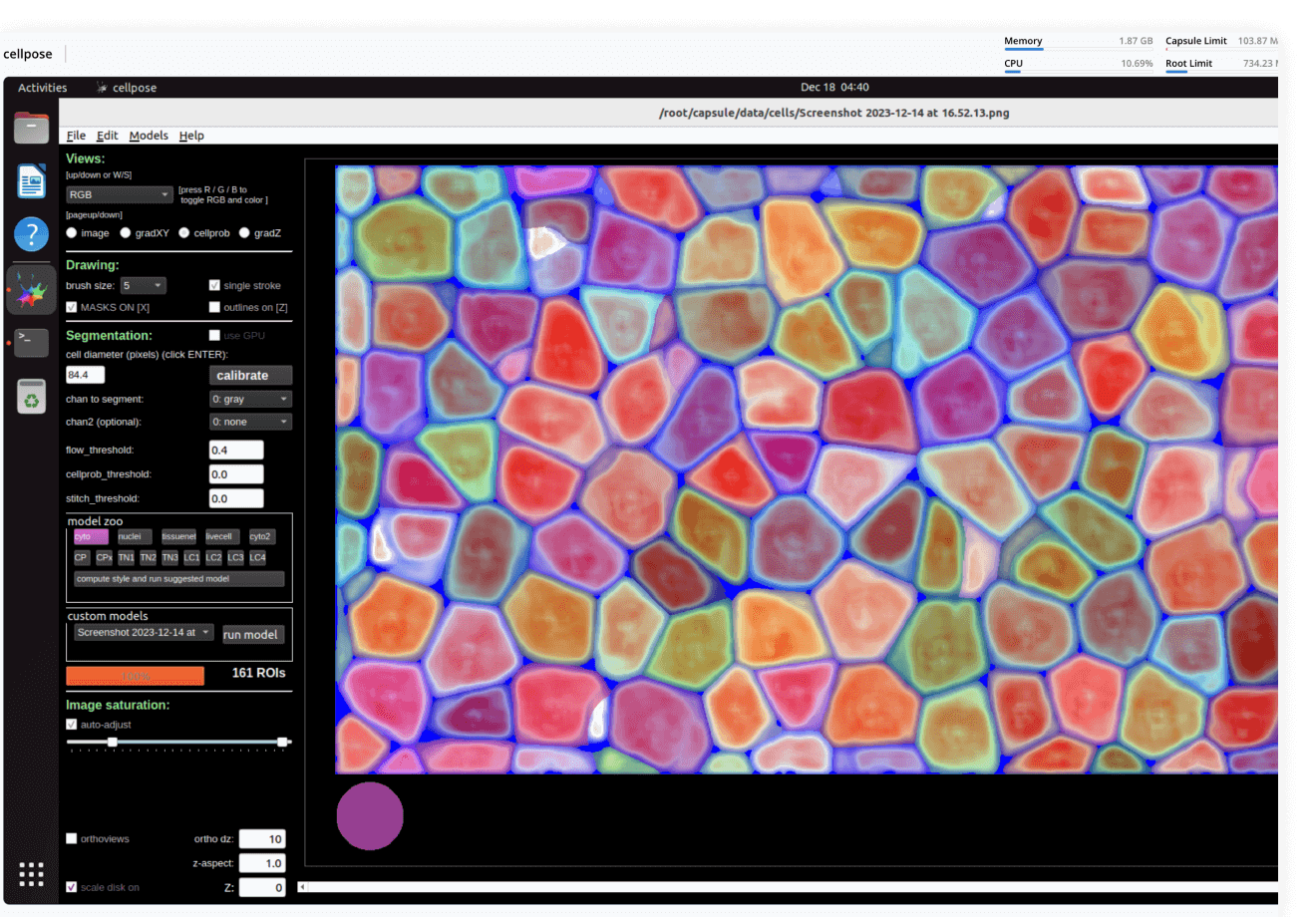

Imaging

Process images using a variety of tools: from dedicated desktop applications to custom-written deep learning pipelines, from a few individual files to petabyte-sized datasets. No DevOps required, always with lineage.

Cloud management

Code Ocean makes it easy to manage data and provision compute: CPUs, GPUs, and RAM. Assign flex machines and dedicated machines to manage what is available to your users. Spot instances, idleness detection, and automated shutdown help reduce cloud costs.

Data/model provenance

Keep track of all data and results with automated result provenance and Lineage Graph generation. Assess reproducibility with a visual representation of every Capsule, Pipeline, and Data asset involved in a computation.
